Events

We host and contribute to a number of interdisciplinary research and public outreach events.

Issues in Explainable AI (XAI)

Workshop Series “Issues in XAI #?”

The spread of increasingly opaque artificial intelligence (AI) systems in society raises profound technical, moral, and legal challenges. The use of AI systems in high-stakes situations gives rise to worries that their decisions are biased, that relying on their recommendations undermines human autonomy or responsibility, that they infringe on the legal rights of affected parties, or that we cannot reasonably trust them. Making AI systems explainable is a promising route towards meeting some of these challenges. But what kinds of explanations are able to generate understanding? And how can we extract suitable explanatory information from different kinds of AI systems?

The workshop series Issues in Explainable AI aims to address these and related questions in an interdisciplinary setting. To this end, the workshops in this series bring together experts from philosophy, computer science, law, psychology, and medicine, among others.

The series was initiated by the project Explainable Intelligent Systems. Since its first iteration at Saarland University, it has been hosted by the Leverhulme Centre for the Future of Intelligence (Cambridge), TU Delft, and the project BIAS (Leibniz University Hannover). Coming iterations will be held at TU Dortmund, the Center for Perspicuous Computing (Saarbrücken-Dresden), and the University of Bayreuth.

Since the series keeps expanding, we have now appointed a steering committee (SC) consisting of some of its initiators: Juan M. Durán (Delft), Lena Kästner (Bayreuth), Rune Nyrup (Cambridge), and Eva Schmidt (Dortmund). If you are interested in hosting one of the upcoming events, please get in touch with one of the SC members.

Past events

Issues in XAI #6: Ethics and Normativity of Explainable AI (Paderborn, 15-17 May 2024)

Issues in XAI #4: Explainable AI: between Ethics and Epistemology (Delft University of Technology, 23-25 May 2022)

Issues in XAI #3: Bias and Discrimination in Algorithmic Decision-Making (Hanover, 8-9 October 2021)

Issues in XAI #2: Understanding and Explaining in Healthcare (Cambridge, May 2021)

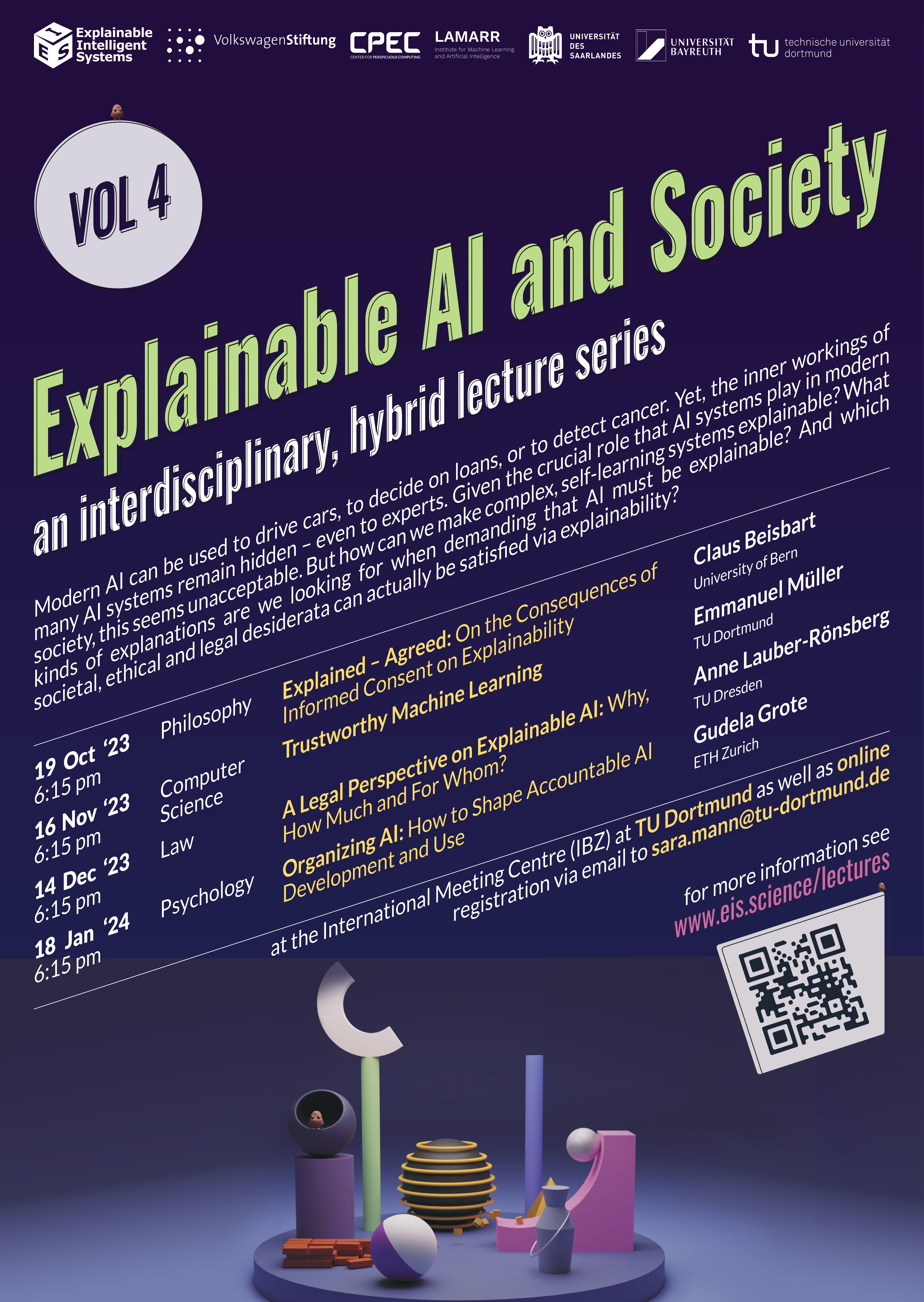

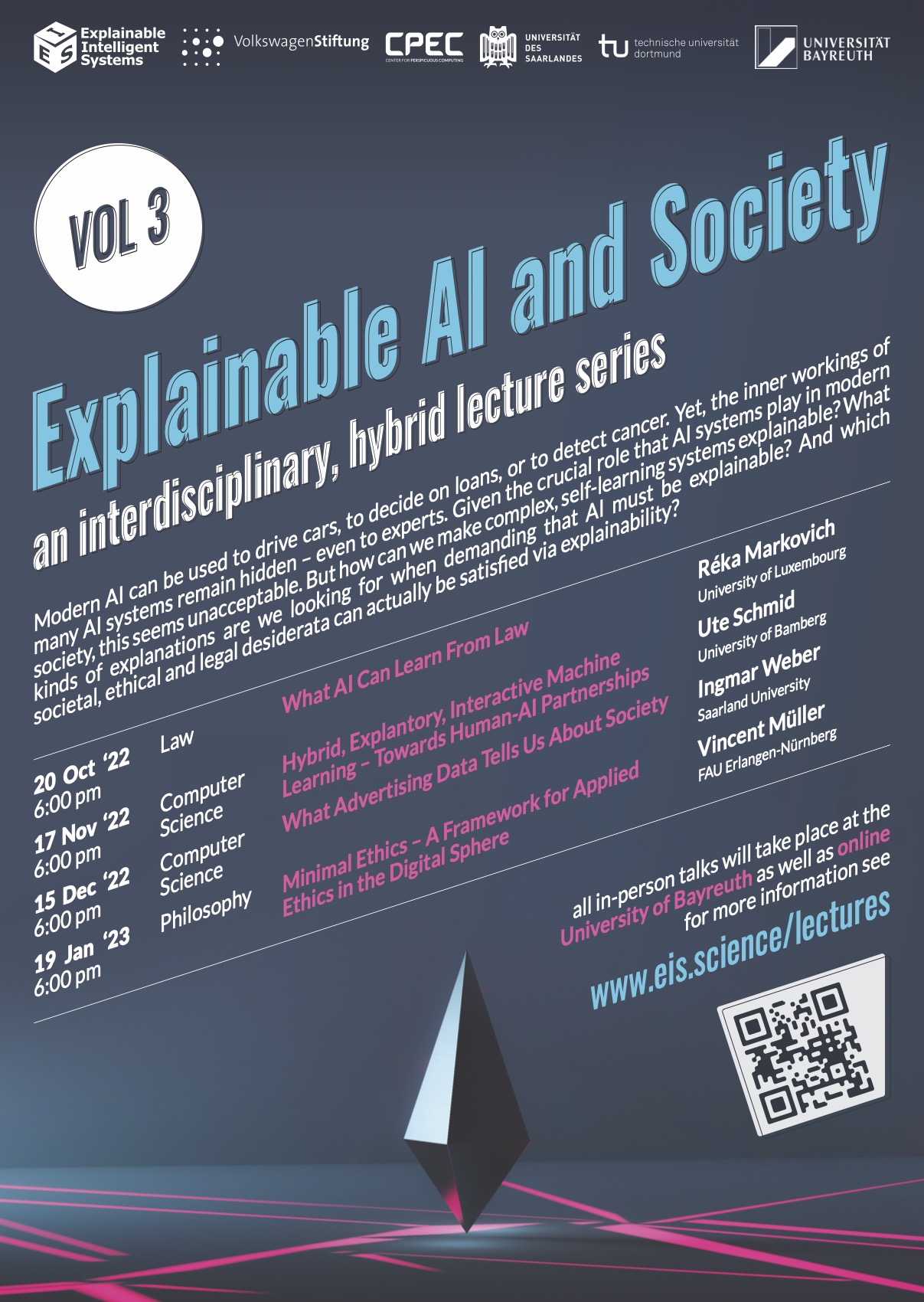

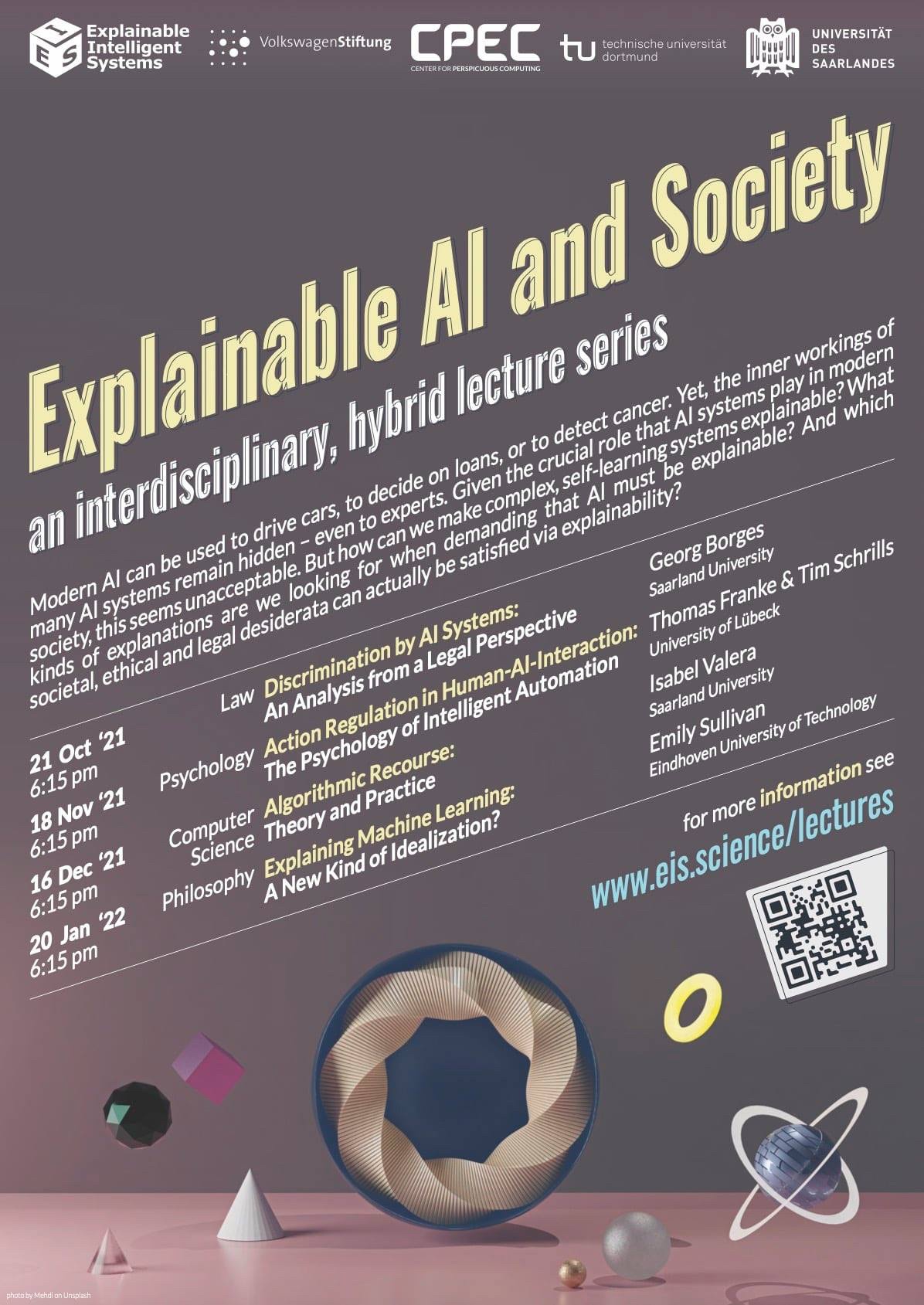

Explainable AI and Society

An Interdisciplinary Lecture Series (hybrid)

Modern AI can be used to drive cars, to decide on loans, or to detect cancer. Yet, the inner workings of many AI systems remain hidden – even to experts. Given the crucial role that AI systems play in modern society, this seems unacceptable. But how can we make complex, self-learning systems explainable? What kinds of explanations are we looking for when demanding that AI must be explainable? And which societal, ethical and legal desiderata can actually be satisfied via explainability?

Current Lectures

The current Explainable AI and Society lecture series takes place at the University of Freiburg in Fall 2024. It is organized by the university's Chair of Work and Organizational Psychology (Arbeits- und Organisationspsychologie). The lectures take place at 6 pm (s.t.) in the Hörsaal der Psychologie (psychology lecture hall), Engelbergerstr. 41c, 79106 Freiburg. The events are hybrid; participation is possible both on site and online.

The speakers are:

| Date | Title | Discipline | Speaker |
| --- | --- | --- | --- |
| 5 Dec ’24, 6:00 pm | Illuminating the complexities of Trustworthy AI with the help of the Trustworthiness Assessment Model (TrAM) | Psychology | Nadine Schlicker, University of Marburg |
| 9 Jan ’25, 6:00 pm | Does the European AI Act tame the AI Monster? | Computer Science | Holger Hermanns, Saarland University |
| 6 Feb ’25, 6:00 pm | CANCELLED | | Kevin Baum, German Research Center for Artificial Intelligence (DFKI) |

Past Lectures

Click on the poster for more information about the past lectures.

Other Events

In addition to our regular lecture series (Explainable AI and Society) and workshop series (Issues in Explainable AI), the EIS team organises a range of other events.

23.03.24: Künstliche Intelligenz und Ethik: Navigieren im digitalen Dilemma (Artificial Intelligence and Ethics: Navigating the Digital Dilemma). Saarbrücken.

23-28.10.23: Responsible and Trustworthy AI Track, AISOLA. Crete.

17-22.07.23: Philosophy and Computer Science Summer School. Bayreuth.

11-13.07.22: Intelligent Systems in Context. Bayreuth.

02.10.19: Erklärbare Intelligente Systeme: Verstehen, Vertrauen, Verantwortung (Explainable Intelligent Systems: Understanding, Trust, Responsibility). Saarbrücken.